Real-Time Sound Classification

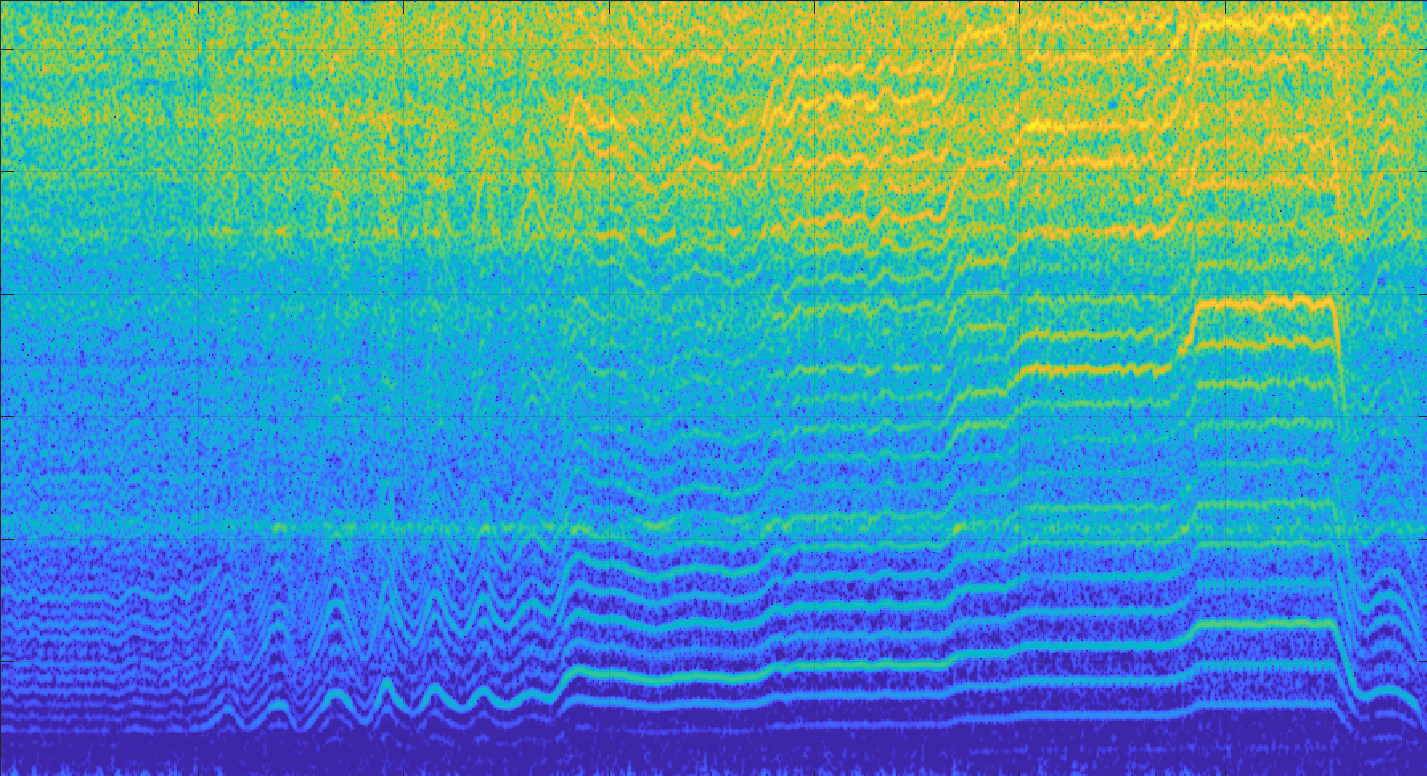

SoundScape classifies environmental sounds with 93.4% accuracy. We do this using an ensemble of PyTorch models trained on an augmented dataset, which lets us classify sounds nearly anywhere: indoors, outdoors, or in noisy environments. Classification runs with under 0.2 seconds of latency because inference takes place in a GPU-accelerated environment.
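
How the ensemble combines its members' outputs is not described here. One common approach is soft voting: each model's logits are turned into probabilities, and the probabilities are averaged before the top class is picked. The sketch below illustrates that arithmetic in plain Python so it runs without dependencies. The label names, logit values, and the `softmax` and `ensemble_predict` helpers are all hypothetical and are not taken from SoundScape; in a PyTorch setup, the logits would come from each model's forward pass.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def ensemble_predict(per_model_logits, labels):
    """Soft voting: average each model's class probabilities, then take the top class."""
    probs = [softmax(logits) for logits in per_model_logits]
    n_models = len(probs)
    avg = [sum(p[i] for p in probs) / n_models for i in range(len(labels))]
    best = max(range(len(labels)), key=avg.__getitem__)
    return labels[best], avg[best]

# Hypothetical labels and logits for one audio clip, one row per model.
LABELS = ["dog_bark", "siren", "rain"]
logits = [
    [2.0, 0.5, 0.1],  # model 1 favors dog_bark
    [1.5, 1.7, 0.2],  # model 2 narrowly favors siren
    [2.2, 0.3, 0.4],  # model 3 favors dog_bark
]

label, confidence = ensemble_predict(logits, LABELS)
print(label, round(confidence, 3))
```

In this made-up example, one model disagrees with the other two, but the averaged probabilities still favor `dog_bark`. That is the usual motivation for an ensemble: a single model's mistake is less likely to decide the final label.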